On Assisted Living of Paralyzed Persons through Real-Time Eye Features Tracking and Classification using Support Vector Machines

Type of article: Original

Qurban A Memon

Associate Professor

EE department, College

of Engineering, UAE University, 15551, Al-Ain, United Arab Emirates

Abstract

Background: Eye features such as eye blinks and eyeball movements can be used as a module in assisted living systems that allow a class of physically challenged people to speak using their eyes. The objective of this work is to design a real-time customized keyboard that a physically challenged person can use to speak to the outside world, for example, to have a computer read a story or a document, to play games, and to exercise nerves, through eye features tracking.

Method: In a paralyzed person's environment, right-left and up-down eyeball movements act like a scroll, and an eye blink acts like a nod. The eye features are tracked using Support Vector Machines (SVMs).

Results: A prototype

keyboard is custom-designed to work with eye-blink detection and

eyeball-movement tracking using Support Vector Machines (SVMs) and tested in a

typical paralyzed person-environment under varied lighting conditions. Tests

performed on male and female subjects of different ages showed results with a

success rate of 92%.

Conclusions: The system needs about 2 seconds to process one command, which limits its real-time use. The efficiency can be improved through the use of a depth sensor camera, a faster processor environment, or motion estimation.

Keywords: Assisted living; Rehabilitation; Paralyzed persons;

Eye-blink detection; Eyeball detection; Biomedical engineering; SVM; Machine

learning; Image processing.

Corresponding author: Qurban A Memon,

EE department, College of Engineering, UAE University, 15551, Al-Ain

qurban.memon@uaeu.ac.ae

Received: 26 January 2019, Accepted: 28 March 2019, English editing: 04 March 2019, Published: 01 April 2019.

Screened by iThenticate. ©2017-2019 KNOWLEDGE KINGDOM PUBLISHING.

1. Introduction

Human feature detection and tracking are gaining importance each day because of the wide variety of applications that can be built on them. One application is constructing interactive ways to communicate with Internet-enabled devices linked to people with disabilities [1]. Commuting and communication are the main issues for these patients. One class, people with tetraplegia (quadriplegia), faces difficulties even with communication. Another class has rehabilitative disabilities (spinal cord injury, repetitive strain injury, etc.) and motor disabilities (autism, cerebral palsy, Lou Gehrig's disease, and so forth). Historically, techniques like Partner-Assisted Scanning (PAS) have been used to help these people communicate. In this technique, the nurse or caregiver presents a set of symbols (e.g., words, alphabets, pictures, letters) on a screen to the disabled patient, observes the patient's eyes on the screen, and then determines which of those symbols the patient selects to express needs. Augmentative and Alternative Communication (AAC) is a very general term and is divided into two types: aided and unaided systems [2]. In aided systems, a tool or device (low or high tech) is used to help communicate. Examples are pointing at or touching letters or pictures on a screen to speak for oneself. PAS is an example of this type of AAC. Some assistive technologies exist, for example, for children with autism to communicate, and for people with Lou Gehrig's disease to stay connected to family, friends, and fans. Nevertheless, these solutions are expensive, as they require two extremely high-quality camera sensors to capture an image and build a 3D model of the user's eyes in order to determine the gaze point and the eye location in space relative to the track box (computer). In unaided systems, signs, facial expressions, or body language are used to support communication. In some cases, combinations of both types are used to convey expression.

Advances in communication, electronics, and biomedical engineering have changed the use of eyes. Technology-assisted living that uses the eye as a window to the world enables people to communicate, gain independence, and control their environment through their eyes. Gesture recognition can also improve brain functioning through exercise when affected people want to stimulate their brain using eye-blink and eyeball movements. The right brain and eyes are profoundly connected. As an example, right-left and up-down eyeball movements, each held for a few seconds, can help sense colors and light. Beginning from this, the right brain's senses begin to surface, and the person can start sensing warm feelings, odor, and pain. Through this eye training, the sensory faculties of the right brain start to wake up. Researchers believe that brain exercise could offer hope in cases of spinal cord injuries, strokes, and other conditions where doctors emphasize regaining strength, mobility, and independence. The solutions built to date are not satisfactory, as they do not combine affordable cost with technology-assisted mobility and communication for the physically challenged person in the absence of a nurse.

This paper is divided into seven sections. Section 2 surveys healthcare domain approaches to help physically challenged persons. Section 3 presents the development of a customized keyboard for rehabilitation and assisted living of the paralyzed person. Section 4 presents the technological components that work together with the keyboard, including camera calibration for eyeball tracking of the paralyzed person. For simplicity, the input device chosen is a webcam that captures pictures at 30 frames per second with a resolution of 1.5 megapixels. After eye-blink and eyeball tracking, the classification step maps the eyeball movements to keyboard symbols. The simulation results, obtained on test images of subjects of different ages and both genders, are presented in Section 5, with a discussion in Section 6. The limitations of this research are highlighted in Section 7, followed by conclusions.

2. Related work

2.1 Internet and Healthcare

The Internet of Things (IoT), Internet of Nano Things (IoNT), Internet of Medical Things (IoMT), and Internet of Everything (IoE) are ways to incorporate electrical or electronic devices connected via the Internet. Social relationships are established among objects, things, and people, and this is where social networking meets the IoT. As far as applications are concerned, management features may be added to the IoT to link the home environment, vehicle electronics, telephone lines, and domestic utility services, addressing neighborhood concerns and enabling the realization of smart cities. In the literature, as an example, [3] addresses these state-of-the-art technologies, their possible future expansion, and even a merger of IoT, IoNT, and IoE.

It was reported in 2013 that there were two

Internet-connected devices for each person and predicted that by 2025, this

number would exceed six [4]. In a health-IoT

ecosystem, different distributed devices capture and share real-time medical

information and then communicate to private, or open clouds, to enable big data

analysis in several new forms in order to activate context dependent alarms,

priorities of applications [5]. In another work [6], the authors develop and

present an IoT based health monitoring system to manage emergencies, using a

toolkit for dynamic and real-time multiuser submissions. An architecture is also proposed by [7] for tracking patients, staff, and devices within hospitals, as a smart hospital system integrating Radio Frequency Identification (RFID), smartphones, and Wireless Sensor Networks (WSN). The parameters sensed in this way are accessible by

local as well as remote users via a customized web service. The work in [8]

proposes an architecture of a healthcare system using personal healthcare

devices to enhance interoperability and reduce data loss. In a study done by

[9], a system is designed using Raspberry Pi to enable seamless monitoring of

health parameters, update the data in a database and then display it on a

website to be accessed only by an authorized person. The authors discuss that

this way doctors can be alerted to any emergency.

The Internet in general and IoT platforms in

particular face security problems and carry privacy concerns [1]. Work in [10]

proposed different security levels and focused

on the security challenges of the wearable devices within healthcare IoT

sector. The security requirements for IoT healthcare environment have also been

addressed in detail by [11], where authors present in-depth review and cost

analysis of Elliptic Curve Cryptography (ECC)-based RFID authentication

schemes. The authors argue that most of the approaches cannot satisfy all

security requirements, whereas only a few recently proposed are suitable for

the healthcare environment. Studies in [12] propose a data accessing method for an IoT-based healthcare emergency medical service and present its architecture to demonstrate the collection, integration, and interoperation of IoT data from location-related resources such as task groups, vehicles, and medical records of patients [13].

There are inspiring

applications of the IoT for healthcare to improve hospital workflow, optimize

the use of resources, and provide cost savings. However, there is a need for real and scalable systems to

overcome significant obstacles (like security, privacy, and trust) [14]. For example, [15] introduces a concept of an Internet of m-health Things (m-IoT). It

discusses general architecture for body temperature measurement with an

application example that matches future functionalities of IoT and m-health.

Another work discusses building an extensible ad-hoc healthcare application and presents a prototype of a healthcare monitoring system for alerting doctors, patients, or patient-relatives [16]. Another prototype reported in [17] presents an infrastructure for healthcare and then builds an Android-based smart healthcare application. [18] addresses

another application related to Parkinson’s disease (PD), where authors discuss

existing wearable technologies and IoT with emphasis on systems assessment,

diagnostics, and consecutive treatment

options. [19] discusses a general framework for personal healthcare using RFID,

where authors investigate RFID for personal healthcare by implementing a sensors

network to track the quality of local environment and wellness of patients.

Likewise, [20] proposed a secure modern IoT based healthcare system using a Body Sensor Network (BSN) to address security concerns efficiently.

In order to provide context awareness that makes a disabled patient's life easier and the clinical process more productive, [21] introduces IoT in medical environments to obtain connectivity with sensors, the patient, and the patient's surroundings. Another work [22]

presents an intelligent system for a

class of disabled people to have access to computers using biometric detection

to improve their interactivity, by using webcam and tracking head movement and

iris. Similarly, [23] presents an IoT architecture stack for visually impaired

and neurologically impaired people, and identifies relevant technologies and IoT

standards for different layers of the architecture. As an application, another

mobile healthcare system based on emerging IoT technologies for wheelchair

users is presented by [24], where the

focus is on the design of a wireless network of body sensors (e.g., electrocardiogram

(ECG) and heart rate sensors), a cushion that detects pressure, sensors in home

environment and control actuators, and so forth.

2.2 Challenges faced by Paralyzed People

The condition of people with paralysis varies, as paralysis exists in four different forms: (a) monoplegia, with one limb paralyzed; (b) hemiplegia, with the leg and arm of one side paralyzed; (c) paraplegia, with the legs paralyzed or sometimes the lower body and the pelvis; and (d) tetraplegia (quadriplegia), with both the legs and arms paralyzed. Quadriplegia (or tetraplegia) is caused by damage to the cervical spinal cord

segments and may result in function loss in arms and the legs. People suffering

from Monoplegia, Hemiplegia, and Paraplegia can get support to move around and

be functional at home using assistive technology tools, like Home Automation

Assistive Technology, Accessible Video Gaming, and Computing, whereas the condition of people with

quadriplegia needs continuous attention and monitoring. These people with

quadriplegia face many challenges and difficulties even when performing simple

daily activities like communication, and in some cases cannot even move their

body muscles. For such cases, several techniques help those people communicate

through PAS. In this approach, the selection of symbols or characters can be

triggered by eye blinking, which depends on the challenged person’s abilities

[25].

2.3 Current Solutions

The applications that use gesture recognition exist in

various disciplines including healthcare. Current technological developments in

gesture recognition have introduced newer ways to interface with machines for

vital sign monitoring, virtual reality, and gaming, among others; however, the corresponding tools cannot help a paralyzed person, as they are developed for people without motor impairments.

Recent

literature contains real-life

applications in different fields using eye features detection and tracking. For

example, in [26], there is a real-time

eye tracking method to detect eyelid movement (for open or close) to work under

realistic lighting conditions for drowsy driver assistance. Effectively, the authors have developed a

hardware interface involving infrared illuminator together with a software

solution to avoid accidents. For the same objective, [27] investigates visual

indicators that reflect the driver’s condition such as yawn, eye behavior, and

lateral and frontal assent of the head in developing a drowsy driver algorithm

and display interface. In another study [28], the authors develop an eye tracker attached to a head-mounted display for virtual reality to support learning. Similarly, the work in [29] develops a hardware-based eye tracking and calibration system involving an infrared sensor/emitter installed on a pair of glasses to detect eye gaze. The work in [30] examines eye movements for biometric applications and addresses this behavioral modality for human recognition. The relevant acquisition aspects of eye movements are also discussed. In another work, [31] proposes a hybrid approach based on neural networks and the imperialist competitive algorithm, working in RGB space, for skin classification issues in face detection and tracking.

In terms of approaches or methods for gesture

recognition, researchers have used approaches based on facial features, a

priori knowledge, and appearance to name

a few. For example, the work in [32] detects the face and then the eyes. The

authors from [33] investigate gaze estimation with Scale Invariant Feature

Transform (SIFT), the homography model

and Random Sample Consensus (RANSAC). In other works, classifiers are applied

for extraction of facial features [34] and skin color segmentation along with

Hough transform [35].

The challenge in eye-blink and eyeball movement

detection of the paralyzed person is due to the low frame rate of the camera.

Once the paralyzed person blinks, this generates only a few (~ 25-30) frames.

3. Designing Scanning Keyboard

The

different eye movements like a blink (bat

an eyelid) or eyeball rotation can be mapped

to various keys on the keyboard. In the proposed design, the purpose is to make

keyboard layout simpler (with needed functions only) and compatible with the PAS technique. As per proposed design,

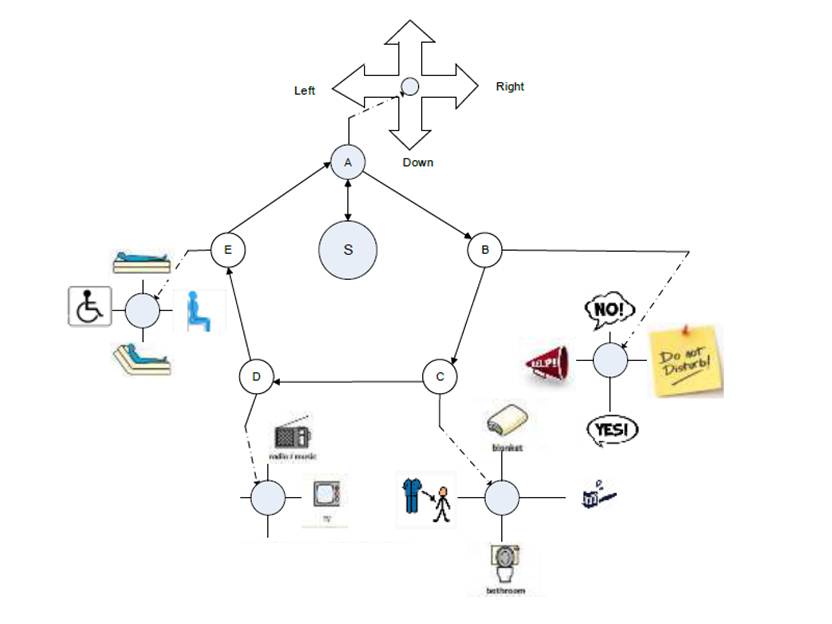

PAS could display alphabets, signs, objects, or web links as shown in Figure 1

to help assisted living (such as communication, mobility, entertainment, and

service) and support rehabilitation. The displayed keyboard has five activity

categories and can be modified to support additional categories.

In Figure 1, the outer five circles (A, B, C, D, and E) represent the start of each activity. The one in the middle represents the system start or standby position. Blinking selects the activity (shown as shaded ‘A’ in Figure 1), whereas not blinking moves the system to the next activity in the clockwise direction after a pause of two seconds (set tentatively). If there is no selection after two rounds in the clockwise direction, the system goes to the standby position.
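The scanning rule described above can be sketched as a small simulation. This is only an illustrative sketch: the function name `scan`, the assumption that scanning starts at activity A, and the representation of blinks as one boolean per two-second dwell are not taken from the paper.

```python
# Illustrative sketch of the scanning rule; names and structure are assumptions.
ACTIVITIES = ["A", "B", "C", "D", "E"]  # outer circles, visited clockwise
MAX_ROUNDS = 2                          # rounds without selection before standby
DWELL_SECONDS = 2.0                     # tentative pause per activity (not simulated here)


def scan(blink_observations, activities=ACTIVITIES, max_rounds=MAX_ROUNDS):
    """Return the selected activity, or "standby" if nothing is selected.

    blink_observations holds one boolean per dwell interval: True if a blink
    was detected while the corresponding activity was highlighted.
    Missing observations are treated as "no blink".
    """
    observations = iter(blink_observations)
    for _ in range(max_rounds):
        for activity in activities:
            if next(observations, False):
                return activity  # a blink selects the highlighted activity
    return "standby"             # two full rounds passed with no selection


if __name__ == "__main__":
    print(scan([False, False, True]))  # blink on the third dwell
    print(scan([False] * 10))          # no blink in two rounds
```

In a live system the boolean for each dwell would come from the eye-blink detector of Section 4.1, and `DWELL_SECONDS` would control how long each activity stays highlighted.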

The top activity represents brain exercise, where the user’s up/down/left/right eyeball movement

pushes the shaded ball in the corresponding direction. After two seconds, the

ball comes to the center. Activity B represents communication with the nurse or

any other person. Again, eyeball movement selects the desired message.

Similarly, activity C represents the service needed by the user like a blanket,

changing clothes, brushing teeth, and access to the toilet. The activity D is

for entertainment like watching TV, listening to the radio, accessing sports

website(s), for example. Activity E

supports mobility and seating positioning. Effectively, within each activity,

the desired selection is based on eyeball movement. Once the desired selection is completed and no more selection is desired, the system moves to the next activity after pausing for two seconds. The pause duration may be modified to match the user's pace with the system sampling intervals. It is clear from the keyboard design that eye-blink and eyeball movements are used all the time during rehabilitation and assisted living activities.

Figure 1: Proposed eye-tracking based keyboard

4. Eye tracking

Here, eye facial

features and eye tracking captured by a

system go through several consecutive stages. The first stage is camera

calibration, which is needed to be done once, as the user is paralyzed, and the person alone may not use

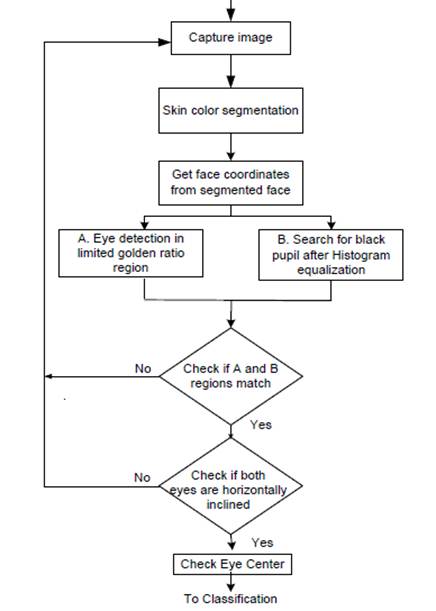

the device (Figure 2 depicts the complete algorithm). The first step logically is to

start the system, which includes the camera

in front of the user. Once the image is

captured, then the face is detected. There are many methods to calibrate, but a software solution based on SVMs was investigated for better accuracy. A set of sequential

equations involving dot product of data points xi and weights w, and variable b as bias are used to

implement SVMs. The classified output y

(as ±1) is determined using the best hyperplane that satisfies [36]:

yi (<w, xi> + b) ≥ 1

given that <w, x> + b

= 0 is a separating classifier line (1)

Figure 2: Steps for Camera Calibration

For simplicity and maximum margin, Lagrange multipliers $\alpha_i$ are selected to lie between 0 and $C$, where the variable $C$ is a constraint to keep $\alpha_i$ within a limited range. For computational efficiency, kernels are often applied such that a function transforms data $x$ from complex space to linear [36]:

$$K(x, y) = \langle \varphi(x), \varphi(y) \rangle \qquad (2)$$

where $\varphi(x)$ and $\varphi(y)$ are the transformed values of the support vectors $x$ and $y$, respectively; $\langle \varphi(x), \varphi(y) \rangle$ is the dot product of these transformed values; and $K(x, y)$ is the equivalent kernel function that replaces this dot product. The kernel concept is used in training an SVM to detect the face during calibration. The training uses a polynomial kernel of order three, with constraints of higher training and validation accuracy resulting in minimum error rate and maximum correct rate. The number of support vectors and the execution time for training, however, were not considered as constraints. Based on a dataset that had favorable samples for the experiment, a subset of 68 image samples was chosen and divided into two file sets, termed positive and negative. The positive set contained face image samples, while the negative set contained non-face image samples. Out of these 68 image samples, half taken from each file set served for training the SVM, with the remaining images used for validation. During training, the values of $\alpha_i$ were generated using twenty-five support vectors and resulted in a high accuracy (for the given set of subjects and the experiments performed) and a weight matrix given by [36]:

$$w = \sum_i \alpha_i y_i x_i \qquad (3)$$

where $\alpha_i$ are the multipliers, $x_i$ are the support vectors with class labels $y_i$, and $w$ are the resulting weights. During training, it was found that $C = 0.00000001$ was good enough for training the SVM in the calibration stage to match the weight matrix $w$ to the face, since larger values of $C$ failed in the corresponding match. While camera calibration approaches differ, the advantages noted for the SVM approach were (i) no iterations needed, (ii) no hardware requirement, (iii) only-once training, and (iv) extremely high accuracy for testing results.
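As an illustration of how the quantities above fit together, the following minimal sketch computes the weights as the sum of multiplier times label times support vector, applies the sign of the dot product plus bias as the classifier, and checks numerically that an order-three polynomial kernel equals a dot product in a transformed space. The toy support vectors, multipliers, and bias are hand-made for the example, and the homogeneous form of the cubic kernel (no constant offset) is an assumption; the paper does not state its kernel constants.

```python
from math import isclose, sqrt


def dot(u, v):
    """Plain dot product <u, v>."""
    return sum(a * b for a, b in zip(u, v))


def weight_vector(alphas, labels, support_vectors):
    """w = sum_i alpha_i * y_i * x_i (the weight formula in the text)."""
    dim = len(support_vectors[0])
    w = [0.0] * dim
    for alpha, y, x in zip(alphas, labels, support_vectors):
        for k in range(dim):
            w[k] += alpha * y * x[k]
    return w


def classify(w, b, x):
    """Return +1 or -1 depending on the side of the line <w, x> + b = 0."""
    return 1 if dot(w, x) + b >= 0 else -1


def cubic_kernel(x, y):
    """Order-three polynomial kernel; the zero offset is an assumption."""
    return dot(x, y) ** 3


def cubic_feature_map(x):
    """Explicit transform for 2-D inputs matching cubic_kernel."""
    x1, x2 = x
    r3 = sqrt(3.0)
    return [x1 ** 3, r3 * x1 ** 2 * x2, r3 * x1 * x2 ** 2, x2 ** 3]


# Toy, hand-made values (not from the paper's training data).
support_vectors = [[1.0, 1.0], [-1.0, -1.0]]
labels = [1, -1]
alphas = [0.25, 0.25]
b = 0.0

w = weight_vector(alphas, labels, support_vectors)

if __name__ == "__main__":
    print("w =", w)
    for x, y in zip(support_vectors, labels):
        # Margin constraint y_i * (<w, x_i> + b) >= 1 holds for each support vector.
        print("margin:", y * (dot(w, x) + b))
    u, v = [1.0, 2.0], [3.0, -1.0]
    print(cubic_kernel(u, v), dot(cubic_feature_map(u), cubic_feature_map(v)))
```

The kernel check shows why the transformed vectors never need to be formed explicitly: evaluating the kernel on the original inputs gives the same number as the dot product in the transformed space.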

4.1 Eye-blink Detection

For eye-blink detection, a skin color approach was used to differentiate between the open and closed states of the eye. The skin color approach uses histogram back projection, where the histogram represents the user's skin color. A higher value in the back-projected image denotes a more likely object location. Thus, within the eye region, a higher percentage of skin color pixels means closed eyes; otherwise, the eyes are open.

After the user's face is detected, a rectangle around the eyes marks a Region of Interest (ROI). Then, the ROI colors are enhanced to offset makeup, lighting, or shadow effects. Effectively, the color intensities are replaced by the brightness of the same colors using the power of gamma ($\gamma$), which equation (4) relates to a user-defined value $0 < \beta < 1$. Once the value is chosen, the image pixels are enhanced by multiplying the ROI pixels with it. Next, the enhanced image pixels are converted to grayscale to retrieve the luminance value. Then, the grayscale pixels are enhanced and the contrast is increased to convert the image to black and white. In this step, dark areas in the grayscale image, such as eye boundaries, pupils, and eyelashes, convert to black, and all light areas, such as skin, convert to white. Effectively, the procedure maps pixels with a luminance above 0.25 to 1 and all others to 0.

In order to determine whether the eyes are

open or closed, the skin color percentage is

used. In the case of open eyes,

the white area percentage will be less than that of closed eyes. For this

purpose, each ‘0’ pixel adds to the black

area, and each ‘1’ pixel adds to the white

area. The following formula calculates the skin

area percentage:

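$$\text{Skin area percentage} = \frac{\text{white area}}{\text{white area} + \text{black area}} \times 100 \qquad (5)$$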

An eye-blink corresponds to a frame with a higher skin area percentage in the ROI. By trial and error, it was determined that the skin area percentage exceeds 90% when the eyes close. A value less than that corresponds to open eyes. In order to determine the accuracy of the approach, twenty trials were run in different lighting conditions, and only one trial gave a false result, which amounts to a 95% success rate. The reason behind the false result was the poor lighting condition used in that trial, which changed the luminance. The success rate of 95% is higher than the ones reported by [37, 38].
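The thresholding and skin-area test described in this subsection can be sketched as follows. This is a minimal illustration on tiny hand-made grayscale grids with values in the range 0 to 1; the function names are hypothetical, and the gamma enhancement and histogram back projection steps are omitted.

```python
def binarize(gray, threshold=0.25):
    """Map luminance above the threshold to 1 (white/skin) and the rest to 0 (black)."""
    return [[1 if pixel > threshold else 0 for pixel in row] for row in gray]


def skin_area_percentage(binary):
    """White pixels as a percentage of all pixels in the ROI."""
    white = sum(sum(row) for row in binary)
    total = sum(len(row) for row in binary)
    return 100.0 * white / total


def eyes_closed(gray, closed_threshold=90.0):
    """Eyes count as closed when the skin area percentage exceeds 90%."""
    return skin_area_percentage(binarize(gray)) > closed_threshold


# Toy ROIs (illustrative only): a dark pupil block versus a single dark eyelash pixel.
open_eye = [
    [0.8, 0.8, 0.8, 0.8, 0.8],
    [0.8, 0.1, 0.1, 0.1, 0.8],
    [0.8, 0.1, 0.1, 0.1, 0.8],
    [0.8, 0.8, 0.8, 0.8, 0.8],
]
closed_eye = [
    [0.8, 0.8, 0.8, 0.8, 0.8],
    [0.8, 0.8, 0.8, 0.8, 0.8],
    [0.8, 0.8, 0.2, 0.8, 0.8],
    [0.8, 0.8, 0.8, 0.8, 0.8],
]

if __name__ == "__main__":
    print(skin_area_percentage(binarize(open_eye)), eyes_closed(open_eye))
    print(skin_area_percentage(binarize(closed_eye)), eyes_closed(closed_eye))
```

Because the decision depends on a fixed luminance threshold, a change in lighting shifts pixels across the 0.25 boundary, which is consistent with the single false result reported under poor lighting.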

4.2 Eyeball Tracking

In this section, the extraction of features such as skin color and facial measurements is discussed, followed by tracking. Though different methods can be used, as reported by [39], an SVM-based method was adopted to test and check performance efficiency experimentally.

The algorithm used for this part of the work

accomplishes tracking by a trained SVM. Once the user is seated with no head

tilt, the center of the eyes is tracked by two methods. One

uses a trained SVM, while the other uses the black pupil detection method. If

both methods detect the left and right

eyes in the same region, it is considered a success

for eyeball tracking.

Figure 3: Eyeball Detection Steps

The first step is to train an SVM classifier on eye samples to get acceptable performance. The SVM parameters were varied, and it was trained with 100 image samples, with each sample cut to size 35×65 to include the face region only. The purpose of the dimension was to know how much of the image area needs to be cut to fit as a training sample, and later to match the size of the window used after training. Other dimensions, such as 35×75 and 45×75, were also tried, but 35×65 turned out to be optimum. Out of the 100 samples, 38 were used as support vectors. The confidence level of each point was computed by equation (1), using the bias and the weight matrix.

The eye search in the face center (per the face golden ratio reported in [39]) used a moving fixed-size window similar to the facial feature extraction method implemented by [40]. For this, the image is sub-divided into sizes larger than 35×65. The original image was of size 35×625; thus, a maximum of nine (9) sub-images could be obtained for the eye search. This number depends on the original image obtained after cropping the captured image. The position of the window is extracted once the eyes are detected, based upon a calculation with the weight matrix of the trained SVM and its comparison to a threshold. This threshold was set by trial and error and found to be 50%, which means that if the confidence level exceeds 0.5, then the eye is detected by the SVM.
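The window arithmetic and the threshold test can be illustrated with a short sketch. It assumes non-overlapping windows exactly 65 columns wide laid side by side across the 625-column strip, which is a simplification of the moving window in the text; the function names and the confidence values in the example are hypothetical.

```python
def window_offsets(image_width, window_width):
    """Starting columns of the non-overlapping windows that fit in the image."""
    count = image_width // window_width
    return [i * window_width for i in range(count)]


def detected_windows(confidences, threshold=0.5):
    """Indices of windows whose confidence level exceeds the threshold."""
    return [i for i, c in enumerate(confidences) if c > threshold]


if __name__ == "__main__":
    offsets = window_offsets(625, 65)
    print(len(offsets), offsets)  # number of sub-images and their start columns

    # Hypothetical per-window confidence levels, one per sub-image.
    confidences = [0.10, 0.20, 0.74, 0.30, 0.10, 0.20, 0.69, 0.20, 0.10]
    print(detected_windows(confidences))
```

With a 625-column image and 65-column windows, integer division gives the nine sub-images mentioned in the text, and only windows whose confidence exceeds 0.5 are reported as eye locations.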

Tracking results were satisfactory, with both eyes tracked for all 15 people (both genders, aged 11 to 52; 6 male, 9 female). To guarantee accuracy, the algorithm checks that the eyes are aligned either vertically (up or down) or horizontally (left or right). As discussed in Section 3, this up, down, left, or right movement of the eyeball is mapped to mouse movement for rehabilitation and gaming applications. Briefly, the eyeball-detection steps are shown in Figure 3.

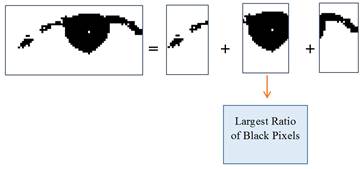

4.3 Classification

As discussed in Section 3, the keyboard maps five eyeball positions: right, left, up, down, and center. To classify the eyeball position, the method of dark pixel detection was used together with the trained SVM. In order to locate the black pupil, histogram equalization was applied along with two proportions, known to hold for every person, that are expressed relative to the face width (6) and relative to the eye width (7).

Using the ratio of black to white pixels, this results in the detection of the eye pupil position, as shown in Figure 4. The center, right, left, up, or down positions of the eyeballs are mapped to four possible choices on the keyboard so that the corresponding function is executed in the system. As an example, the center position indicates that no function is to be executed (refer to Figure 5). Figure 6 displays the classification flowchart.
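One way to picture the five-way classification is the sketch below, which locates the dark (pupil) pixels in a binarized eye region and compares their centroid with the region center. The centroid rule, the tolerance value, and all names are assumptions made for illustration; the paper states only that the ratio of black to white pixels is used, not the exact decision rule.

```python
def classify_pupil(binary_eye, tolerance=0.15):
    """Classify pupil position from a binary eye ROI (0 = dark, 1 = light).

    Directions are in image coordinates: "left" means toward column 0 and
    "up" means toward row 0. A mirrored camera view would swap left and right.
    """
    rows, cols = len(binary_eye), len(binary_eye[0])
    dark = [(r, c) for r in range(rows) for c in range(cols) if binary_eye[r][c] == 0]
    if not dark:
        return "center"  # no pupil found: treat as "no function"
    mean_row = sum(r for r, _ in dark) / len(dark)
    mean_col = sum(c for _, c in dark) / len(dark)
    # Offsets of the dark centroid from the ROI center, each in [-0.5, 0.5].
    dx = mean_col / (cols - 1) - 0.5
    dy = mean_row / (rows - 1) - 0.5
    if abs(dx) <= tolerance and abs(dy) <= tolerance:
        return "center"
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    return "down" if dy > 0 else "up"


def grid_with_dark(row, col, rows=5, cols=5):
    """Helper for the example: an all-light ROI with one dark pixel."""
    return [[0 if (r, c) == (row, col) else 1 for c in range(cols)] for r in range(rows)]


if __name__ == "__main__":
    for position in [(2, 2), (2, 4), (2, 0), (0, 2), (4, 2)]:
        print(position, classify_pupil(grid_with_dark(*position)))
```

The four non-center outcomes correspond to the four keyboard choices, while "center" maps to no action, matching the behavior described for Figure 5.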

Figure 4: Detection of eyeball during classification

stage

Figure 5: Center eyeball - nothing to be

executed; Right eyeball - function to be executed.

Figure 6: Flow chart of the classification phase

5. Testing Results

The main purpose of the testing was to check the system that initially trains SVMs for face detection and to observe its performance after training. As discussed in Section 4, for facial feature detection, the steps included processing images until a confidence level is reached. This measure determines how closely an image resembles the SVM weight matrix. If this calculated measure exceeds a threshold set at 0.5 (as discussed in Section 4.2), the detection is considered a success. During calibration and face detection, this confidence level was found to be 0.7388, which exceeds the threshold of 0.5 set by trial and error.

Table 1: Testing results of the tracking algorithm

| Test | Gender (age) | Environment | Tracking Results |
|---|---|---|---|
| 1 | Female (age 30) | 2 fluorescent bulbs and afternoon sunlight | Both eyeballs tracked successfully in all cases, with a runtime of 0.15 seconds |
| 2 | Female (age 25) | 2 fluorescent bulbs, afternoon sunlight, black and white patterned scarf | |
| 3 | Male (Child) (age 12) | Only evening sunlight | |
| 4 | Female (age 22) | 2 fluorescent bulbs | |
| 5 | Female (age 39) | 2 fluorescent bulbs and evening sunlight | |
| 6 | Male (age 45) | 2 fluorescent bulbs, sunlight and black scarf | |
| 7 | Female (Child) (age 15) | Only afternoon sunlight | |
| 8 | Female (age 48) | 2 fluorescent bulbs | |
| 9 | Male (age 28) | 2 fluorescent bulbs, afternoon sunlight, colorful floral scarf | |
| 10 | Female (age 20) | Only evening sunlight | |
| 11 | Male (age 50) | Only afternoon sunlight | |
| 12 | Female (age 52) | Only evening sunlight | |
| 13 | Male (Child) (age 11) | 2 fluorescent bulbs | |
| 14 | Female (Child) (age 13) | 2 fluorescent bulbs | |
| 15 | Male (age 25) | 2 fluorescent bulbs and evening sunlight | |

The data came from people of different ages and genders in an indoor environment with incandescent bulbs and different periods of sunlight. The different lighting conditions helped test accuracy on an i7 dual-core processor with a built-in webcam and Matlab10. The run time calculated for eye tracking came out to be 0.15 seconds (Table 1). Sample results are shown in Figure 7.

Figure 7: (Left: Eyes detected; Right: Tracked eye centers)

The SVM-based approach outperformed [41], which uses the morphology to detect eyes.

The morphology approach failed due to low-resolution webcam under incandescent

lamps in our environment setting. The keyboard, eye-blink, and eyeball tracking

were integrated, and the system was

tested indoors with incandescent bulbs and under periods of sunlight (Table 2).

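The success rate is computed as:

$$\text{Success rate} = \frac{\text{number of successful tests}}{\text{total number of tests}} \times 100\% \qquad (8)$$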
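As a worked check of the success-rate computation, the sketch below applies it to the 25 integrated-system tests of Table 2. It assumes that each table row counts as one test and that the two rows marked "wrong" (tests 4 and 10) are the only failures; the variable and function names are illustrative.

```python
TOTAL_TESTS = 25
WRONG_TESTS = {4, 10}  # rows marked "wrong" in Table 2

# True means the eye position was detected correctly in that test.
outcomes = {test: test not in WRONG_TESTS for test in range(1, TOTAL_TESTS + 1)}


def success_rate(results):
    """Successful tests as a percentage of all tests."""
    return 100.0 * sum(results.values()) / len(results)


if __name__ == "__main__":
    print(sum(outcomes.values()), "of", len(outcomes), "tests succeeded")
    print(success_rate(outcomes), "%")
```

Under these assumptions, 23 of the 25 tests succeed, which reproduces the 92% success rate quoted in the abstract.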

The authors in [33] used a 1944×1296 sample resolution with an efficiency of 94% for single eye detection and 50% for both eye detection, whereas the authors of [35] applied skin color segmentation and the circular Hough transform, with a performance efficiency of 77.78% for single eye detection and 66.67% for both eye detection. Similarly, [22] detected the face and later the iris, with best results amounting to 80% and 70% on left and right click, respectively, as a cursor click on the computer screen, whereas the authors in [34] use trials to estimate fatigue based on eye state, with an average performance accuracy of 85% on the left and closed eyes (refer to Table 3).

6. DISCUSSION

The keyboard can be customized, and persons with physical challenges can easily use it to speak through their eyes during rehabilitation. More activities or functionalities can also be added, for example commonly used symbols, native words, or those adopted in Augmentative and Alternative Communication (AAC).

As discussed earlier, there are many approaches to building an eye-tracking solution. In this work, a low-cost software solution that requires only a webcam was proposed, with comparatively the best tracking results. For calibration, the SVM approach was adopted because it gave 100% correct results.

Table 2: Testing results of the integrated system

| Test | Activity Selection Result | Details on eye detection |
|------|---------------------------|--------------------------|
| 1 | No | correct as ‘center’ |
| 2 | Yes | correct as ‘left’ |
| 3 | Yes | correct as ‘down’ |
| 4 | No | wrong as ‘up’ |
| 5 | No | correct as ‘center’ |
| 6 | No | correct as ‘center’ |
| 7 | Yes | correct as ‘right’ |
| 8 | No | correct as ‘center’ |
| 9 | Yes | correct as ‘right’ |
| 10 | Yes | wrong as ‘right’ |
| 11 | No | correct as ‘center’ |
| 12 | No | correct as ‘center’ |
| 13 | Yes | correct as ‘down’ |
| 14 | No | correct as ‘center’ |
| 15 | Yes | correct as ‘down’ |
| 16 | No | correct as ‘center’ |
| 17 | No | correct as ‘center’ |
| 18 | No | correct as ‘center’ |
| 19 | Yes | correct as ‘left’ |
| 20 | Yes | correct as ‘center’ |
| 21 | Yes | correct as ‘up’ |
| 22 | Yes | correct as ‘down’ |
| 23 | Yes | correct as ‘up’ |
| 24 | Yes | correct as ‘left’ |
| 25 | Yes | correct as ‘down’ |

Table 3: Comparative results

| No. | Research Work | Accuracy | No. of samples |
|-----|---------------|----------|----------------|
| 1 | [33] | 94% for single-eye detection and 50% for both-eye detection | 50 samples |
| 2 | [35] | 77.78% for single-eye detection and 66.67% for both-eye detection | 9 samples |
| 3 | [22] | 80% and 70% on left and right click | 3 samples |
| 4 | [34] | 85% on the left and closed eyes | Not mentioned |
| 5 | Proposed Approach | 92% on both-eye detection | 25 samples |

For prototyping purposes, a single-board computer connected to the keyboard and other peripherals was used to develop the solution. On this platform it is easy to program and debug the functions written for the selected keyboard activities, although other IoT prototyping hardware platforms can also be used.

When implementing the proposed tracking system, the following points should be noted: (1) any object in the surroundings whose color is similar to skin degrades the performance of the system; (2) performance suffers if the person’s face is not aligned horizontally or the person is not looking straight into the webcam; and (3) the system works only when the person is 60 cm to 180 cm away from the camera.
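Points (2) and (3) above can be expressed as a simple pre-check that decides whether a captured frame is worth processing. The following is a minimal sketch under stated assumptions, not the implementation used in this work: it assumes that an estimate of the subject's distance, head tilt, and gaze direction is already available from earlier processing stages, and the `Observation` class, the function `precondition_problems`, and the 10-degree tilt tolerance are hypothetical. Only the 60 cm to 180 cm range comes from the text. Point (1), skin-colored objects in the background, is not covered.

```python
from dataclasses import dataclass
from typing import List

# Distance limits stated in the text (point 3).
MIN_DISTANCE_CM = 60.0
MAX_DISTANCE_CM = 180.0
# Hypothetical tolerance for head tilt; the paper gives no numeric value.
MAX_TILT_DEG = 10.0


@dataclass
class Observation:
    """Hypothetical per-frame measurements supplied by earlier stages."""
    distance_cm: float        # estimated subject-to-camera distance
    head_tilt_deg: float      # rotation of the face away from horizontal
    looking_at_camera: bool   # True if the subject faces the webcam


def precondition_problems(obs: Observation) -> List[str]:
    """Return the reasons a frame should be skipped (empty list = usable)."""
    problems = []
    if not MIN_DISTANCE_CM <= obs.distance_cm <= MAX_DISTANCE_CM:
        problems.append("distance outside 60-180 cm")
    if abs(obs.head_tilt_deg) > MAX_TILT_DEG:
        problems.append("face not aligned horizontally")
    if not obs.looking_at_camera:
        problems.append("not looking straight into the webcam")
    return problems


print(precondition_problems(Observation(100.0, 2.0, True)))   # usable frame
print(precondition_problems(Observation(40.0, 25.0, False)))  # all checks fail
```

Returning the list of reasons, rather than a single Boolean, would let a caregiver-facing interface report why a command was not accepted.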

The proposed method uses an ordinary laptop webcam that delivers 30 frames per second at 640×480 resolution. The algorithm captures only a single frame for processing. The solution can easily be ported to other hardware platforms, such as smartphones, with the keyboard and eye tracking implemented in software.

7. Limitations

Persons who are “only” paralyzed are often a minority in real-life clinical settings. From a clinical point of view, there are many other patients who suffer from different kinds of plegia, motor disabilities, etc., and who have many additional clinically relevant conditions beyond pure paralysis. The approach proposed in this work may help under the assumption that the brain of the paralyzed person is amenable to brain exercise. As noted earlier, people with hemiplegia, for example, may have hemianopsia, that is, reduced vision in half of the visual field. Some people may even have reduced vision on the same side of both eyes. Likewise, a neurological condition may cause a deficit in awareness of half of the visual field. These classes of patients may not be trainable for exercise of nerves. In addition, many patients suffer from comorbidities and multimorbidity. Therefore, special training methods might be necessary, if training is possible at all.

Therefore, the approach and tools developed in this work are applicable to one class of people only and can be considered an important first step toward a later evaluation with real-life patients that respects the context in which the paralysis occurred. It is assumed that the approach offers no solution for blind people, for those with very low vision, or for very young children. Much further investigation remains to be done.

8. CONCLUSIONS

A customized real-time solution to enhance assisted living of paralyzed persons was developed and tested indoors under varied lighting conditions with male and female subjects aged 11 to 52. The solution integrated a software keyboard, eye-blink detection, and eyeball tracking and classification using an SVM classifier. Testing showed a performance efficiency of 92% with a run time of 0.15 seconds. The performance efficiency can be improved by using a dual camera or a depth sensor camera, and a faster processor or multicore environment can further reduce the run time in future enhancements. The solution can be used by, for instance, wheelchair users and bedridden patients, and it can be ported to different platforms, including smartphones. For future work, various improvements can be explored, for example: (1) SVM classification performance can be enhanced using more training samples with additional head orientations; (2) a hardware implementation is likely to yield a real-time solution; (3) stand-alone chips might provide add-on features to the product; and (4) more variation can be allowed in environmental lighting conditions.

Face recognition can help to ensure that a blink is legitimate. Given that the system needs at least 2 seconds to process one command, it does not have to work in real time. Since face recognition often requires a dimensionality reduction step (in the feature extraction phase), motion estimation can help to study the face as an expanded region of interest (ROI) [42-46].

9. Conflict of interest statement

We certify that there is no conflict of interest

with any financial organization in the

subject matter or materials discussed in this manuscript.

10. Authors’ biography

Qurban A Memon has contributed at the levels of teaching, research, and community service in the area of electrical and computer engineering. He graduated from the University of Central Florida, Orlando, US, with a PhD degree in 1996. Currently, he is working as an Associate Professor at UAE University, College of Engineering, United Arab Emirates. He has authored or co-authored over ninety publications in his academic career. He has executed research grants and development projects in the areas of intelligent-based systems, security, and networks. He has served as a reviewer for many international journals and conferences, as well as session chair at various conferences.

11. References

[1] Estrela, V. V., A. C. B. Monteiro, R. P. França, Y. Iano,

A. Khelassi, and N. Razmjooy. Health 4.0: Applications, Management,

Technologies and Review. Medical Technologies Journal, Vol. 2,

no. 4, Jan. 2019, pp. 262-76, https://doi.org/10.26415/2572-004X-vol2iss4p269-276

[2] Light, J., McNaughton, D. (2014).

Communicative competence for individuals who require augmentative and

alternative communication: A new definition for a new era of communication?

Augmentative and Alternative Communication. 30 (1): 1–18.

doi:10.3109/07434618.2014.885080

[3] Miraz, M., Ali, M., (2015). A

Review on Internet of Things, Internet of Everything and Internet of Nano

Things, In Proc. IEEE Int'l Conf. Internet Technologies and Applications, pp.

219-224, Wrexham.

[4] Skiba, D. (2016). Emerging

Technologies: The Internet of Things (IoT), Nursing Education Perspectives,

Vol. 34, No. 1, pp. 63–64. https://doi.org/10.5480/1536-5026-34.1.63

[5] Fernendez, F., Pallis, G.,

(2014). Opportunities and Challenges of the Internet of Things for Healthcare,

Proceedings of Int'l Conf. on Wireless Mobile Communication and Healthcare, pp.

263-266, Athens. https://doi.org/10.4108/icst.mobihealth.2014.257276

[6] Sotiriadis, S., Bessis, N.,

Asimakopoulou, E., Mustafee, N., (2014). Towards Simulating the Internet of

Things, In Proc. of IEEE 28th Int'l Conf. on Advanced Inf. Networking and

Application Workshops, pp. 444-448, Victoria.

[7] Catarinucci, L., Donno, D.,

Mainetti, L., Palano, L., Patrono, L., Stefanizzi, M., Tarricone, L. (2015).

An IoT-Aware Architecture for Smart Healthcare Systems, IEEE Internet of Things

Journal, Vol. 2, No. 6, pp. 515-526.

[8] Ge, S., Chun, S., Kim, H., Park,

J. (2016). Design and Implementation of Interoperable IoT Healthcare System

Based on International Standards, In Proc. of 13th IEEE Annual Cons. Comm.

& Net. Conf., pp. 119-124.

[9] Gupta, M., Patchava, V., Menezes,

V., (2015). "Healthcare based on IoT using Raspberry Pi," Int'l Conf.

on Green Computing and Internet of Things, pp. 796–799, Noida.

[10] Alkeem, E., Yeun, C., Zemerly,

M., (2015). Security and Privacy Framework for Ubiquitous Healthcare IoT

Devices, In Proc. 10th Int'l Conf. for Internet Techn. Sec.Trans., pp. 70-75,

London.

[11] He, D., and Zeadally, S., (2015). An

Analysis of RFID Authentication Schemes for Internet of Things in Healthcare

Environment Using Elliptic Curve Cryptography, IEEE Internet of Things J.,

Vol. 2, No. 1, pp. 72-83.

[12] Xu, B., Xu, L., Cai, H., Xie, C., Hu, J., Bu, F. (2014).

Ubiquitous Data Accessing Method in IoT-Based Information System for

Emergency Medical Services, IEEE Trans. Industrial Inf., Vol. 10, No. 2, pp.

1578-1586.

[13] Memon, Q., Khoja, S. (2012).

RFID–based Patient Tracking for Regional Collaborative Healthcare,

International Journal of Computer Applications in Technology, 45 (4), 231-244.

https://doi.org/10.1504/IJCAT.2012.051123

[14] Laplante, P., Laplante, N., (2016).

The Internet of Things in Healthcare-Potential Applications and

Challenges, IT Professional, pp. 2-4, Vol. 18, May-June.

[15] Istepanian, R., Sungoor, A.,

Faisal, A., Philip, N. (2011). Internet of m-Health Things- m-IOT, IET Seminar

on Assisted Living, pp. 1-3, London.

[16] Jiménez, F., Torres, R., (2015).

Building an IoT-aware Healthcare Monitoring System, In Proc. 34th Int'l Conf.

Chilean Computer Science Society, pp. 1-4, Santiago. https://doi.org/10.1109/SCCC.2015.7416592

[17] Mohamed, J. (2014). Internet of

Things: Remote Patient Monitoring Using Web Services and Cloud Computing, In

Proc. IEEE International Conference on Internet of Things, pp. 256–263, Taipei. https://doi.org/10.1109/iThings.2014.45

[18] Pasluosta, C., Gassner, H.,

Winkler, J., Klucken, J., Eskofier, B. (2015). An Emerging Era in the

Management of Parkinson's disease: Wearable Technologies and the Internet of

Things, IEEE J. Biomed. Health Inf., Vol. 19, No. 6, pp. 1873–1881. https://doi.org/10.1109/JBHI.2015.2461555 PMid:26241979

[19] Amendola, S., Lodato, R., Manzari,

S., Occhiuzzi, C., Marrocco, G., (2014). RFID Technology for IoT-Based

Personal Healthcare in Smart Spaces, IEEE Internet of Things Journal, Vol. 1,

No. 2, pp. 144-152.

[20] Gope, P., Hwang, T., (2016).

BSN-Care: A Secure IoT-Based Modern Healthcare System Using Body Sensor

Network, IEEE Sensors Journal, Vol.16, No. 5, pp. 1368-1376. https://doi.org/10.1109/JSEN.2015.2502401

[21] Jara, A., Zamora, M., and Skarmeta,

A., (2010). An Architecture based on Internet of

Things to Support Mobility and Security in Medical Environments, In Proc 7th

IEEE Consumer Comm. Net. Conf., pp. 1 – 5, Las Vegas.

[22] Bayasut, B., Ananta, G., Muda, A.

(2011). Intelligent Biometric Detection

System for Disabled People, 11th International Conference on Hybrid Intelligent

Systems, pp. 346–350, Malacca. https://doi.org/10.1109/HIS.2011.6122130

[23] Lopes, N., Pinto, F., Furtado, P.,

Silva, J., (2014). IoT Architecture Proposal for

Disabled People, In Proc. 10th IEEE Int'l Conf. Wireless and Mobile Comp., Net.

Communications, pp. 152 – 158, Larnaca.

[24] Yang, L., Ge, Y., Li, W., Rao,

W., Shen, W., (2014). A Home Mobile Healthcare System for Wheelchair Users, In

Proc. of the 18th IEEE Int'l Conf. on Computer Supported Cooperative Work in

Design, pp. 609–614, Hsinchu. https://doi.org/10.1109/CSCWD.2014.6846914

[25] Blackstone, S., Beukelman, D., and

Yorkston, K. (2015). Patient-Provider Communication: Roles for Speech-Language

Pathologists and Other Healthcare Professionals, Plural Publishers.

[26] Ghosh, S., Nandy, T., & Manna, N.

(2015). Real time eye detection and tracking method for driver assistance

system. In Advancements of Medical Electronics (pp. 13-25). Springer, New

Delhi. https://doi.org/10.1007/978-81-322-2256-9_2

[27] Galarza, E. E., Egas, F.

D., Silva, F. M., Velasco, P. M., & Galarza, E. D. (2018). Real Time Driver Drowsiness

Detection Based on Driver's Face Image Behavior Using a System of Human

Computer Interaction Implemented in a Smartphone. In Proc. Int'l Conf. on

Information Theoretic Security, pp. 563-572. Springer, Cham. https://doi.org/10.1007/978-3-319-73450-7_53

[28] Menezes, P., Francisco,

J., & Patrão, B. (2018). The Importance of Eye-Tracking

Analysis in Immersive Learning-A Low Cost Solution. In Online Engineering &

Internet of Things (pp. 689-697). Springer, Cham. https://doi.org/10.1007/978-3-319-64352-6_65

[29] Liu, S. S., Rawicz, A., Ma, T., Zhang, C., Lin, K.,

Rezaei, S., & Wu, E. (2018). An eye-gaze tracking and

human computer interface system for people with ALS and other locked-in

diseases. CMBES Proceedings, 33(1).

[30] Galdi, C., & Nappi, M.

(2019). Eye Movement Analysis in Biometrics. In Biometrics under Biomedical

Considerations, pp. 171-183. Springer, Singapore. https://doi.org/10.1007/978-981-13-1144-4_8

[31] Razmjooy, N., Mousavi, B. S., and

Soleymani, F. (2013). A hybrid neural network Imperialist Competitive Algorithm

for skin color segmentation. Mathematical and Computer Modelling, 57(3-4),

848-856. https://doi.org/10.1016/j.mcm.2012.09.013

[32] Petrisor, D.; Fosalau, C.;

Avila, M.; Mariut, F., (2011). Algorithm for face and eye detection using

colour segmentation and invariant features, In Proc. 34th Int'l Conf. on Tel.

and Signal Proc., vol., no., pp. 564-569, 18-20.

[33] Praglin, M., & Tan, B.,

(2014). Eye Detection and Gaze Estimation. Eye, pp. 1-5

[34] Lin L., Huang C., Ni X., Wang

J., Zhang H., Li X., Qian Z. (2015). Driver fatigue detection based on eye

state, Technology and Health Care: European Society for Engineering and

Medicine, 23(s2): S453-S463 https://doi.org/10.3233/THC-150982 PMid:26410512

[35] Zia, M. A., Ansari, U., Jamil, M.,

Gillani, O., & Ayaz, Y. (2014). Face and Eye Detection in

Images using Skin Color Segmentation and Circular Hough Transform, In Proc.

IEEE Int'l Conf. Rob. Em. A Tec. Eng., pp. 211-213.

[36] Schölkopf B., Platt J.,

Shawe-Taylor J., Smola A., Williamson R., (2001). Estimating the Support of a

High-dimensional Distribution, J. of Neural Computation, Vol. 13, No. 7, pp.

1443-1471 https://doi.org/10.1162/089976601750264965 PMid:11440593

[37] Divjak, M., Bischof, H., (2009).

Eye-blink based fatigue detection for prevention of Computer Vision Syndrome,

In Proc. Conf. on Machine Vision Applications (MVA), pp. 350–353

[38] Heishman, R., Duric, Z., (2007).

Using Image Flow to Detect Eye-blinks in Color Videos, IEEE Workshop on

Applications of Computer Vision, p. 52 https://doi.org/10.1109/WACV.2007.61

[39] Holland, E. (2008). Marquardt's

Phi mask: Pitfalls of Relying on Fashion Models and the Golden ratio to

describe a Beautiful Face, Aesthetic Plastic Surgery, Vol. 32, No. 2, pp.

200-208 https://doi.org/10.1007/s00266-007-9080-z PMid:18175168

[40] Nguyen, M.H., Perez, J., and De la

Torre, F. (2008). Facial Feature Detection with Optimal

Pixel Reduction SVM, In Proc. 8th IEEE Int'l Conf. Aut. Face & G. Recog.,

Amsterdam, pp. 1-6, doi: 10.1109/AFGR.2008.4813372

[41] Dhruw, K. K., (2009). A. Eye

Detection Using Variants of Hough Transform B. Off-Line Signature Verification,

Doctoral dissertation, National Institute of Technology Rourkela.

[42] Krolak, A., and Strumiłło, P.

Eye-blink detection system for human–computer interaction, Univ Access Inf Soc,

11:409–419. DOI 10.1007/s10209-011-0256-6.

[43] Coelho, A.M. and Estrela, V.V.

(2012). A Study on the Effect of Regularization Matrices in Motion Estimation,

IJCA (0975 – 8887), Vol 51, 19.

[44] Marins, H. R. and Estrela, V.V. (2016). On the Use of Motion

Vectors for 2D and 3D Error Concealment in H.264/AVC Video. Feature Detectors

and Motion Detection in Video Processing. IGI Global, 2017. 164-186.

doi:10.4018/978-1-5225-1025-3.ch008

[45] Bloehdorn S. et al. (2005).

Semantic Annotation of Images and Videos for Multimedia Analysis. In:

Gómez-Pérez A., Euzenat J. (eds) The Semantic Web: Research and Applications.

ESWC 2005. Lecture Notes in Computer Science, vol 3532. Springer, Berlin,

Heidelberg https://doi.org/10.1007/11431053_40

[46] Memon, Q., Smarter Healthcare

Collaborative Network, Building Next-Generation Converged Networks: Theory,

2013